Table of Contents

- Modern Content Management: A Revolution for Sc...

- Exploring the Integration of Aesthetics plus E...

- When Continuous Enhancement with Analysis Bec...

- The Competitive Edge of Strapi for Complex Di...

- Secure Architecture Standards for Business-C...

- How Consistent Evolution with Assessment Rem...

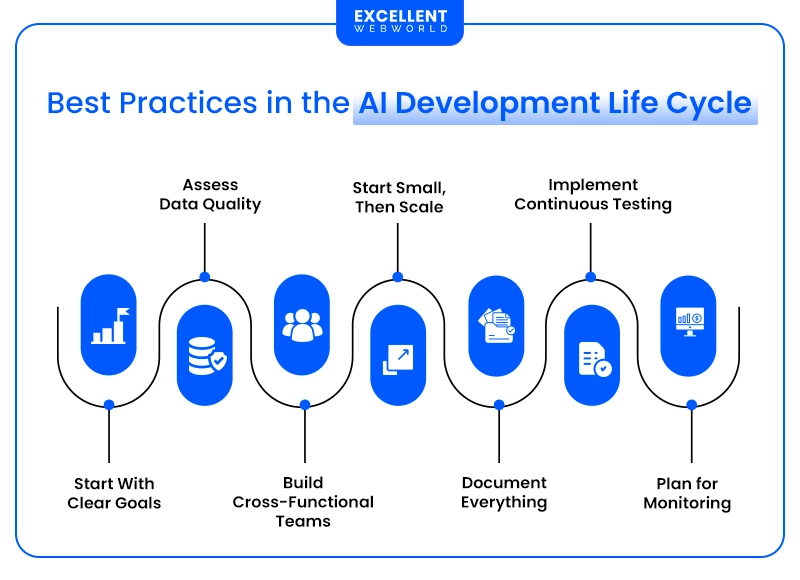

Building an AI system is a marathon that demands research, evaluation, and testing to identify the role of AI in your business and to ensure a secure, ethical, and ROI-driven release. To help you out, the Xenoss team created a simple framework that explains how to build an AI system. It covers the key considerations, challenges, and stages of the AI project lifecycle.

Your first goal is to determine the role AI will play in your operations. The easiest way to approach this is to work backwards from your objective(s): What do you want to achieve with AI implementation?

Modern Content Management: A Revolution for Scalable Digital Platforms

In the finance sector, AI has proved its value for fraud detection. All the acquired training data will then have to be pre-cleansed and cataloged. Use a consistent taxonomy to establish clear data lineage, and then monitor how different users and systems use the supplied data.

Exploring the Integration of Aesthetics plus Engineering in Current Digital Experiences

Additionally, you'll have to split the available data into training, validation, and test datasets to benchmark the developed model. Mature AI development teams complete most of the data management processes with data pipelines: an automated sequence of steps for data ingestion, processing, storage, and subsequent access by AI models.

Example of data pipeline architecture for data warehousing

With a robust data pipeline architecture, companies can process very large numbers of data records in near real time.
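The three-way split mentioned above can be sketched in a few lines of Python. This is a minimal illustration, not the framework's prescribed method: the function name `split_dataset`, the 70/15/15 proportions, and the fixed seed are all assumptions chosen for the example.

```python
import random


def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle records and split them into train, validation, and test lists.

    The fractions are illustrative defaults; whatever remains after the
    train and validation shares goes to the test set.
    """
    if not 0 < train_frac < 1 or not 0 <= val_frac < 1:
        raise ValueError("fractions must lie between 0 and 1")
    if train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a share for the test set")

    shuffled = list(records)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)

    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))
```

Shuffling before slicing matters when the source data is ordered (for example, by date or customer), because an unshuffled split would give the three sets different distributions. For time-dependent data, a chronological split is often the better choice.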

Amazon's Supply Chain Finance Analytics team, in turn, improved its data engineering work with Dremio. With the current setup, the company built new extract, transform, load (ETL) workloads 90% faster, while query speed improved by 10X. This, in turn, made data more accessible for hundreds of concurrent users and machine learning tasks.

When Continuous Enhancement with Analysis Becomes Important for Long-Term Growth

The training process is complex, too, and prone to issues such as sample efficiency, training stability, and catastrophic interference, among others. Successful commercial applications are still few and mostly come from deep tech companies. Foundation models are the backbone of generative AI. By fine-tuning a pre-trained model, you can quickly build a new generative AI algorithm.

Unlike traditional ML frameworks for natural language processing, foundation models require smaller labeled datasets because they already carry knowledge embedded during pre-training. That said, foundation models can still produce inaccurate and inconsistent results, especially when applied to domains or tasks that differ from their training data. Training a foundation model from scratch also requires substantial computational resources.

The Competitive Edge of Strapi for Complex Digital Projects

Effectively, the model does not produce the desired results in the target environment because of differences in parameters or configurations. For example, if the model dynamically optimizes prices based on the total number of orders and conversion rates, yet these parameters change significantly over time, it will no longer provide accurate recommendations.
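One simple way to notice that an input such as order volume has shifted away from what the model saw in training is to compare recent values with the training baseline. The sketch below is an assumption-laden illustration rather than a method described in the article: the function names, the sample numbers, and the threshold of two standard deviations are all hypothetical, and production systems typically use richer statistical tests.

```python
from statistics import mean, stdev


def drift_score(baseline, recent):
    """Return how far the recent mean sits from the baseline mean,
    measured in baseline standard deviations."""
    spread = stdev(baseline)
    gap = abs(mean(recent) - mean(baseline))
    if spread == 0:
        # A constant baseline: any change at all counts as unbounded drift.
        return 0.0 if gap == 0 else float("inf")
    return gap / spread


def has_drifted(baseline, recent, threshold=2.0):
    """Flag drift when the score exceeds the (illustrative) threshold."""
    return drift_score(baseline, recent) > threshold


# Hypothetical daily order counts seen during training and in production.
training_orders = [100, 105, 98, 102, 95, 101, 99, 103]
stable_orders = [101, 97, 104, 100]
shifted_orders = [150, 160, 155, 149]

print(has_drifted(training_orders, stable_orders))   # False
print(has_drifted(training_orders, shifted_orders))  # True
```

A check like this only signals that retraining or recalibration may be needed; it does not say how the model's recommendations have degraded.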

Instead, most maintain a database of model versions and perform iterative model training to progressively improve the quality of the final product. [...], and only 11% are successfully deployed to production.

After that, you benchmark the iterations to determine the model version with the highest accuracy. Choosing the right set of features is another important technique. A model with too few features struggles to adapt to variations in the data, while too many features can lead to overfitting and worse generalization. Highly correlated features can also cause overfitting and weaken explainability techniques.
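To make the point about highly correlated features concrete, the following sketch flags feature pairs whose Pearson correlation exceeds a cutoff. The feature names, the sample values, and the 0.9 threshold are assumptions made for the example; the article does not prescribe a specific method or cutoff.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # A constant feature has no defined correlation; treat it as 0.
        return 0.0
    return cov / sqrt(var_x * var_y)


def correlated_pairs(features, threshold=0.9):
    """List feature pairs whose absolute correlation meets the threshold."""
    pairs = []
    for (name_a, xs), (name_b, ys) in combinations(features.items(), 2):
        r = pearson(xs, ys)
        if abs(r) >= threshold:
            pairs.append((name_a, name_b, round(r, 3)))
    return pairs


# Hypothetical feature columns: revenue tracks orders almost linearly.
features = {
    "orders": [10, 20, 30, 40, 50],
    "revenue": [105, 198, 310, 402, 495],
    "discount": [5, 1, 4, 2, 3],
}

print(correlated_pairs(features))
```

Here only the `orders` and `revenue` pair is flagged, which suggests one of the two could be dropped or combined before training.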

Secure Architecture Standards for Business-Critical Digital Retail Systems

Deployment is also the most error-prone stage. Only 32% of ML projects, including refreshing models for existing deployments, typically reach deployment.

Deployment success across different machine learning projects

The reasons for failed deployments range from a lack of executive support for the project due to unclear ROI to technical difficulties with ensuring stable model operations under increased loads.

The team needed to ensure that the ML model was highly available and delivered highly personalized recommendations from the titles available on the user's device, and that it did so for the platform's millions of users. To ensure high performance, the team decided to run model scoring offline and then serve the results once the user logs into their device.
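The offline-scoring pattern described above separates an expensive batch job from a cheap lookup at request time. The sketch below illustrates the idea only; the scoring rule, the function names, and the in-memory dictionary standing in for a results store are all hypothetical and are not taken from the team's actual system.

```python
def score_titles(user_history, catalog):
    """Hypothetical stand-in for an expensive model call: rank titles by
    how many genres they share with the user's history."""
    liked = set(user_history)
    return sorted(
        catalog,
        key=lambda title: (-len(liked & set(catalog[title])), title),
    )


def run_offline_batch(histories, catalog, top_n=2):
    """Batch job: precompute the top-N recommendations for every user."""
    return {
        user: score_titles(history, catalog)[:top_n]
        for user, history in histories.items()
    }


def serve(user, precomputed, fallback):
    """Request path: a plain lookup, with no model call at login time."""
    return precomputed.get(user, fallback)


catalog = {
    "Title A": ["drama"],
    "Title B": ["comedy", "drama"],
    "Title C": ["sci-fi"],
}
histories = {"user_1": ["comedy", "drama"], "user_2": ["sci-fi"]}

store = run_offline_batch(histories, catalog)
print(serve("user_1", store, fallback=["Title A"]))  # ['Title B', 'Title A']
```

The trade-off is freshness: recommendations reflect the last batch run, so a user with no precomputed entry gets the fallback list until the next run.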

How Consistent Evolution with Assessment Remains Vital for Future Achievement

It also helped the company optimize cloud infrastructure costs. Ultimately, successful AI model deployments come down to having effective processes. Just as the DevOps principles of continuous integration (CI) and continuous delivery (CD) improve the deployment of regular software, MLOps improves the speed, efficiency, and predictability of AI model deployments. MLOps is a set of practices and tools that AI development teams use to create a sequential, automated pipeline for releasing new AI solutions.
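As a rough illustration of what a sequential, automated release pipeline means in practice, the sketch below runs a fixed list of stages in order and stops at the first one that fails. The stage names, the simulated accuracy value, and the 0.9 quality gate are assumptions for the example; real MLOps tooling adds versioning, monitoring, and rollback on top of this idea.

```python
def run_pipeline(stages, context):
    """Run stages in order; stop at the first stage that returns False.

    Returns the names of completed stages and the name of the failed
    stage (or None if every stage passed).
    """
    completed = []
    for name, stage in stages:
        if not stage(context):
            return completed, name
        completed.append(name)
    return completed, None


def validate_data(ctx):
    return len(ctx["rows"]) > 0


def train(ctx):
    # Placeholder for real training: copy a simulated accuracy value.
    ctx["accuracy"] = ctx["simulated_accuracy"]
    return True


def evaluate(ctx):
    # Quality gate: block the release if accuracy is below the minimum.
    return ctx["accuracy"] >= ctx["min_accuracy"]


def deploy(ctx):
    ctx["deployed"] = True
    return True


stages = [
    ("validate_data", validate_data),
    ("train", train),
    ("evaluate", evaluate),
    ("deploy", deploy),
]

good = {"rows": [1, 2, 3], "simulated_accuracy": 0.93, "min_accuracy": 0.9}
bad = {"rows": [1, 2, 3], "simulated_accuracy": 0.71, "min_accuracy": 0.9}

print(run_pipeline(stages, good))
print(run_pipeline(stages, bad))
```

In the second run the pipeline halts at `evaluate`, so the underperforming model never reaches the `deploy` stage.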
